BigQuery Public Dataset

Blockchain data indexing in NEAR Public Lakehouse is for anyone wanting to understand blockchain data. This includes:

- Users: create queries to track NEAR assets, monitor transactions, or analyze on-chain events at a massive scale.

- Researchers: use indexed data for data science tasks, including on-chain activities, identifying trends, or feeding AI/ML pipelines for predictive analysis.

- Startups: can use NEAR's indexed data for deep insights on user engagement, smart contract utilization, or insights across tokens and NFT adoption.

Benefits:

- NEAR instant insights: Historical on-chain data queried at scale.

- Cost-effective: eliminate the need to store and process bulk NEAR protocol data; query as little or as much data as preferred.

- Easy to use: no prior experience with blockchain technology is required; bring a general knowledge of SQL to unlock insights.

Getting started

- Login into your Google Cloud Account.

- Open the NEAR Protocol BigQuery Public Dataset.

- Click in the VIEW DATASET button.

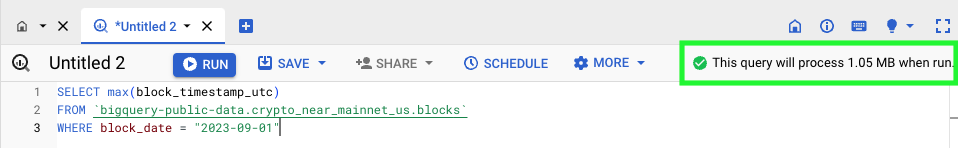

- Click in the + to create a new tab and write your query, click in the RUN button, and check the

Query resultsbelow the query. - Done :)

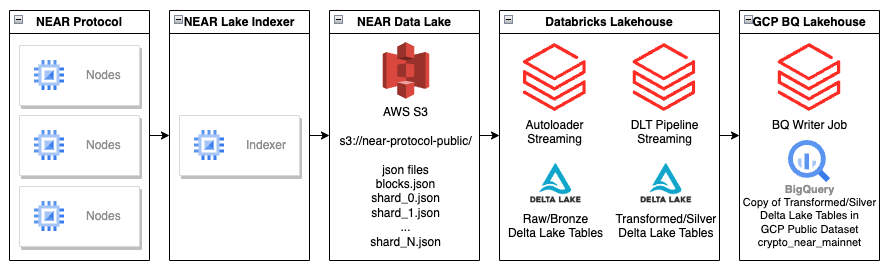

The NEAR Public Lakehouse repository contains the source code for ingesting NEAR Protocol data stored as JSON files in AWS S3 by NEAR Lake Indexer.

Example Queries

- How many unique signers and accounts have interacted with my smart contract per day?

SELECT

ra.block_date collected_for_day,

COUNT(DISTINCT t.signer_account_id) as total_signers,

COUNT(DISTINCT ra.receipt_predecessor_account_id) as total_accounts

FROM `bigquery-public-data.crypto_near_mainnet_us.receipt_actions` ra

JOIN `bigquery-public-data.crypto_near_mainnet_us.receipt_origin_transaction` ro ON ro.receipt_id = ra.receipt_id

JOIN `bigquery-public-data.crypto_near_mainnet_us.transactions` t ON ro.originated_from_transaction_hash = t.transaction_hash

WHERE ra.action_kind = 'FUNCTION_CALL'

AND ra.receipt_receiver_account_id = 'social.near' -- change to your contract

GROUP BY 1

ORDER BY 1 DESC;

How much it costs?

- NEAR pays for the storage and doesn't charge you to use the public dataset.

To learn more about BigQuery public datasets check this page.

- Google GCP charges for the queries that you perform on the data. For example, in today's price "Sep 1st, 2023" the On-demand (per TB) query pricing is $6.25 per TB where the first 1 TB per month is free.

Check Google's pricing page for detailed pricing info, options, and best practices.

You can check how much data it will query before running it in the BigQuery console UI. Again, since BigQuery uses a columnar data structure and partitions, it's recommended to select only the columns and partitions (block_date) needed to avoid unnecessary query costs.

Architecture

The data is loaded in a streaming fashion using Databricks Autoloader into raw/bronze tables, and transformed with Databricks Delta Live Tables streaming jobs into cleaned/enriched/silver tables.

The silver tables are also copied into the GCP BigQuery Public Dataset.

Available Data

The current data that NEAR is providing was inspired by NEAR Indexer for Explorer.

NEAR plans to improve the data available in the NEAR Public Lakehouse making it easier to consume by denormalizing some tables.

The tables available in the NEAR Public Lakehouse are:

- blocks: A structure that represents an entire block in the NEAR blockchain.

Blockis the main entity in NEAR Protocol blockchain. Blocks are produced in NEAR Protocol every second. - chunks: A structure that represents a chunk in the NEAR blockchain.

Chunkof aBlockis a part of aBlockfrom aShard. The collection ofChunksof theBlockforms the NEAR Protocol Block.Chunkcontains all the structures that make theBlock:Transactions,Receipts, andChunk Header. - transactions:

Transactionis the main way of interaction between a user and a blockchain. Transaction contains: Signer account ID, Receiver account ID, and Actions. - execution_outcomes: Execution outcome is the result of execution of

TransactionorReceipt. In the result of the Transaction execution will always be a Receipt. - receipt_details: All cross-contract (we assume that each account lives in its own shard) communication in Near happens through Receipts. Receipts are stateful in a sense that they serve not only as messages between accounts but also can be stored in the account storage to await

DataReceipts. Each receipt has apredecessor_id(who sent it) andreceiver_idthe current account. - receipt_origin: Tracks the transaction that originated the receipt.

- receipt_actions: Action Receipt represents a request to apply actions on the

receiver_idside. It could be derived as a result of aTransactionexecution or anotherACTIONReceipt processing. Action kind can be:ADD_KEY,CREATE_ACCOUNT,DELEGATE_ACTION,DELETE_ACCOUNT,DELETE_KEY,DEPLOY_CONTRACT,FUNCTION_CALL,STAKE,TRANSFER. - receipts (view): It's recommended to select only the columns and partitions (

block_date) needed to avoid unnecessary query costs. This view join the receipt details, the transaction that originated the receipt and the receipt execution outcome. - account_changes: Each account has an associated state where it stores its metadata and all the contract-related data (contract's code + storage).

- Skipped Blocks: NEAR Blockchain can contain skipped blocks, e.g. block

57730443. For these cases we can find the block for the chunk data using theprev_block_hashcolumn, e.g.SELECT * FROM chunks c JOIN blocks b ON c.chunk.header.prev_block_hash = b.header.prev_hash.